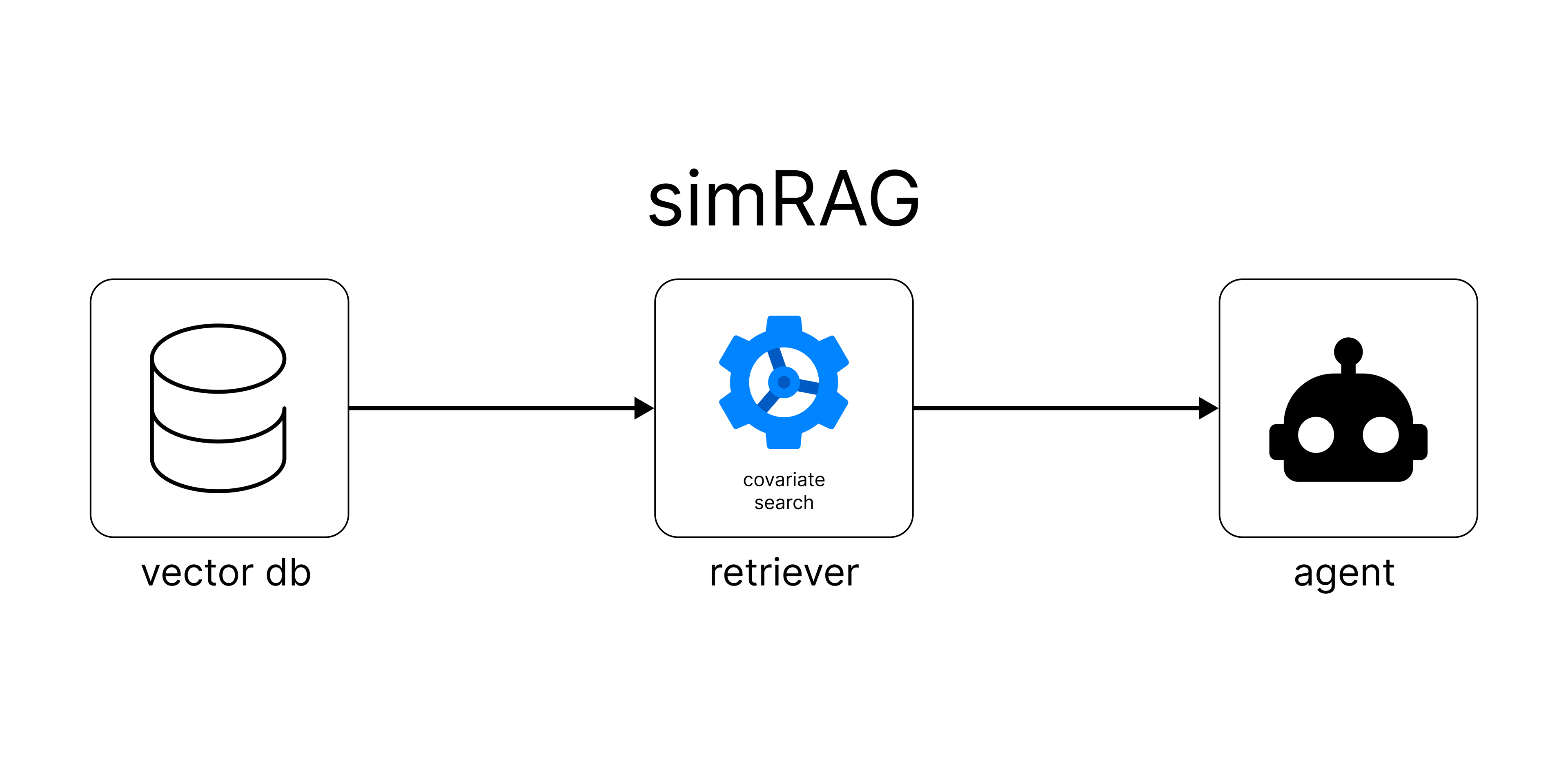

Introducing SimRAG: controlling RAG with Covariate Search

Michelangiolo Mazzeschi • 2025-03-05

introducing-simrag-controlling-rag-with-covariate-search

From the author of distribution-based score fusion (DBSF) - cited by Stanford, Redis, Qdrant…

Over one year ago, I started experimenting with multimodality, specifically applied to vector search. I became disappointed when I noticed that multimodal encoders, despite the huge step forward in retrieval technology, result in an overwhelming number of false positives. These models, processing a combination of different modalities in the same latent space, had obvious and unforgiving flaws that still make their adoption challenging.

Distribution-based score fusion

Improving an existing model (especially if it is trained on billions of images) it is a huge challenge, hence, I had to resort to pure math and algorithms to improve the state of my retriever. You could imagine my stupor when I found out that there was no way of combining the results of two different searches unless each sample was a document, but in my case, it was a combination of a text search and an image search.

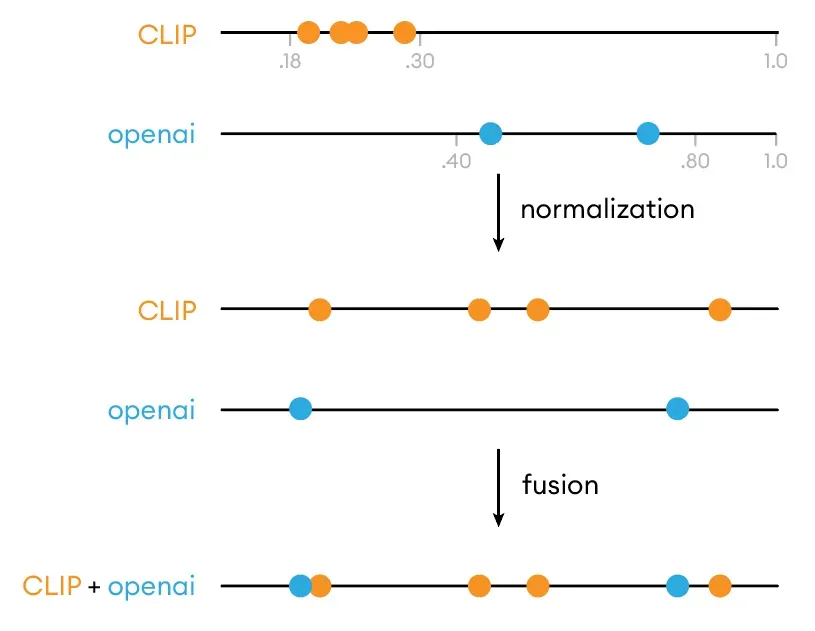

The only way to merge the scores of the two searches was to normalize the results (the score of each search has a relative relevance; it would be like trying to compare pears with apples), but this approach results in the first element of both searches always reaching the top. In a few words, if one of the two searches was successful and the other one a failure, I would be forced to include the first sample of the failed search at the top.

My solution was to avoid normalizing the score (which uses a default range of [0, 1]) and use a relative minmax range for each search established by looking at the edges of the cosine score distribution of each model. I named this new fusion algorithm distribution-based score fusion (DBSF). One year later, being its main variation a combination of BM25 and text retrieval, it has been recognized as the #1 algorithm for text search.

When used in document search, its lack of flexibility (words only contribute to the score if there is a perfect match, ex. “shirt” and “shirts” are not BM25 matches) lets us filter out more noise. However, for use cases such as the retrieval of e-commerce and retail data, the BM25 retrieval is not optimal because the amount of text at our disposal is much smaller than a paragraph (it is no longer than a sentence). DBSF will fail, when applied to e-commerce search.

DBSF+

The only way to make this fusion algorithm more flexible is to improve the text retrieval. The string algorithms that can accomplish this are called fuzzy algorithms (there should be 8), and the best algorithm for the job is called Ratcliff/Obershelp and is essentially one of the possible fuzzy searches that matches substrings rather than full words. I call this variation DBSF+ as a dedicated improvement for this specific use case.

String scoring

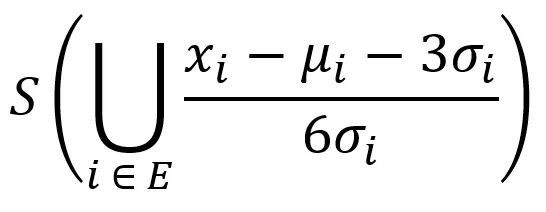

Unfortunately for us, any fuzzy search algorithm returns a score which is not confined to any range. The easiest solution (as I did for BM25) it is to compute the score for each matching word inside the query and finally divide it by the number of elements. This formula provides us with a score within the range [0, 1] that we can use to make a proper fusion.

A practical example

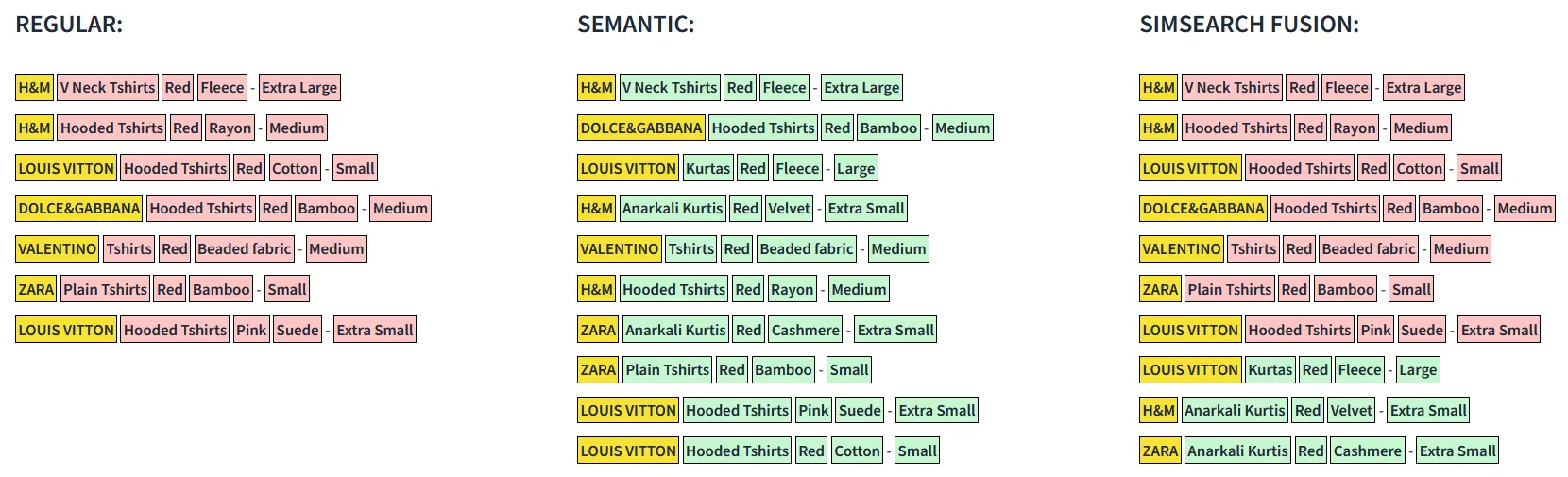

Let us look at cases where DBSF+ can outperform DBSF. Do recall that we are using retail data; if this algorithm were to be applied to documents, it would probably underperform, retrieving piece of strings that appear in the query, but they are semantically unrelated.

Query: "one redd tshirt"As we can see from the query (REGULAR=string, SEMANTIC=semantic, SIMSEARCH FUSION=dbsf+), the word “redd” has been misspelled on purpose, yet the correct results have still been caught. BM25, as explained, would not be able to match “redd” with “red”, causing the string score to be irrelevant when compared with the semantic search.

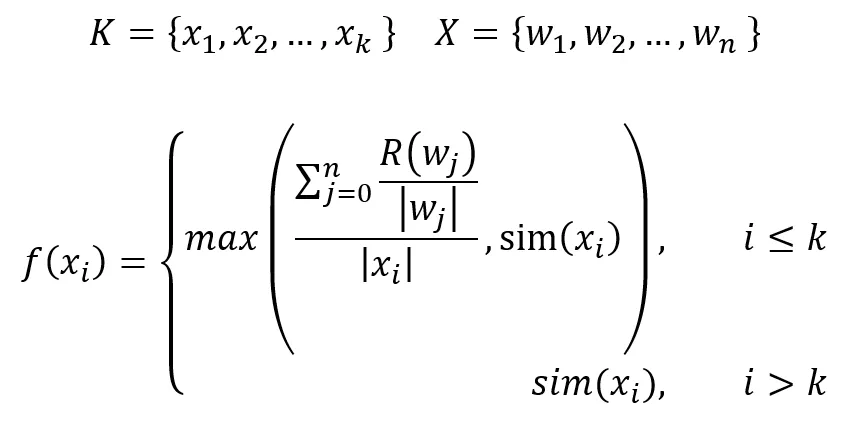

The scoring formula for DBSF+ can be expressed using the following function. Being:

- K the set of the top semantic results (arbitrarily chosen) on top of which we are applying the string scoring

- X the set of separate words w assigned to each corresponding x

- sim(x) the cosine similarity score of a sample x

- R(x) the score of the Ratcliff/Obershelp fuzzy algorithm

Rather than running a parallel string search, given the string retrieval capabilities of the encoder, we are applying the score to only the top k results, only running a single search while saving a substantial amount of resources.

See More Posts

Cardy

Copyright © 2021 Govest, Inc. All rights reserved.